This post is a bit more technical in nature, though I think the first half may be interesting even to those who are not into Python and the inner workings of Roam data import.

What is Roam?

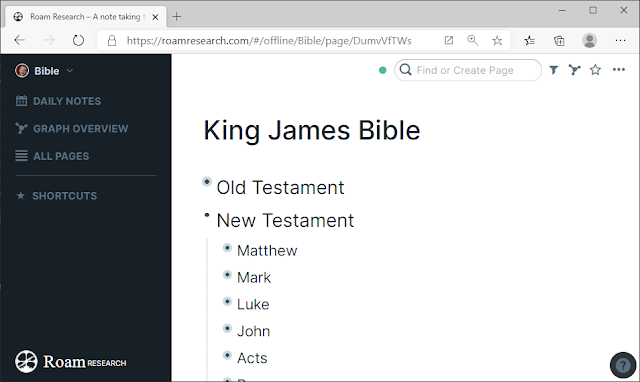

Roam is a bit like a personal Wiki. It is a note-taking application that supports networked thought. At its core, Roam is extremely simple to understand: a Roam database consists of pages and paragraphs. The paragraphs are displayed as bulleted lists that can be nested, much like in an outliner. In Roam lingo, paragraphs are called "blocks".

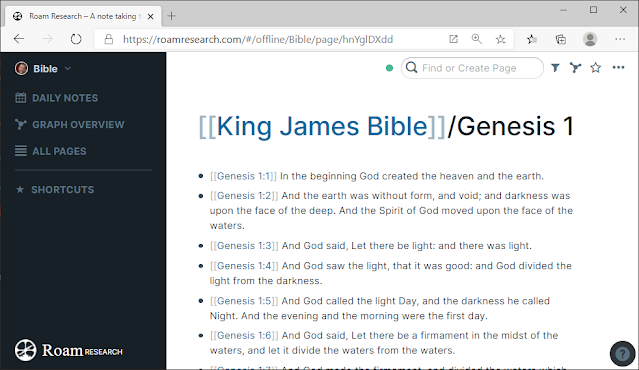

When writing, you can simply reference other pages using double square brackets. For example, to reference my Roam page for Zsolt's Blog, I just write [[Zsolt's Blog]]. If I then click on the reference, Roam takes me to that page.

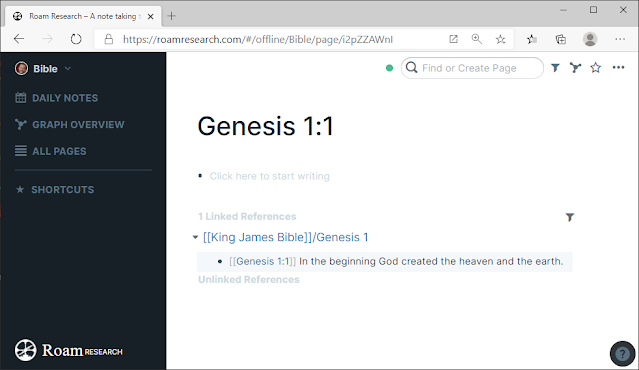

What makes Roam unique is that you can reference not only pages but also individual blocks, by entering the block's unique identifier in ((round brackets)). With such a reference, the text exists only once, in the original block, but it can appear on multiple pages. This is very useful when, for example, you want to quote some literature in a document you are writing: with a simple click you can find the original location of the block, as well as all the other places where you have quoted it.
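To make the two kinds of references concrete, here is a small sketch that builds the markup for each: a page reference wraps a title in double square brackets, and a block reference wraps a block's UID in double round brackets. The helper names `page_ref` and `block_ref` are hypothetical, and the UID is made up for illustration.

```python
def page_ref(title: str) -> str:
    """Roam page reference: [[Title]]."""
    return f"[[{title}]]"


def block_ref(uid: str) -> str:
    """Roam block reference: ((uid)), where uid identifies an existing block."""
    return f"(({uid}))"


# Hypothetical quote: the text itself lives only in the original block;
# this line just points at it by UID and links to a page.
quote_uid = "AbC123xyz"  # made-up 9-character UID
note = "Quoted on " + page_ref("Zsolt's Blog") + ": " + block_ref(quote_uid)
print(note)
```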

What did I want to achieve?

I wanted to create a simple Python script to import books into Roam. The most basic use case is to study a body of text and reference quotes from it in my own writing, in such a way that I can easily trace the references back to the original text. For a more detailed explanation of one such use case, head over to my post about progressive summarization. If you want to read an actual book summary created using progressive summarization, check out The Checklist Manifesto.

Taking the idea one step further, it is not hard to imagine applying natural language processing techniques like Word2Vec (and its cousin Doc2Vec) to pre-process a body of text, creating internal references between paragraphs based on their semantic similarity. Block references in Roam would be an ideal way to work with such references.
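As a rough illustration of the idea (not something implemented in this post), suppose each paragraph has already been turned into a vector by a model such as Doc2Vec. Linking each paragraph to its most similar neighbour is then a nearest-neighbour search by cosine similarity, and the link can be written as a Roam block reference. The vectors and UIDs below are toy values I made up; a real model would produce vectors with hundreds of dimensions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def most_similar(uid, vectors):
    """Return the UID of the paragraph most similar to `uid` (excluding itself)."""
    candidates = (other for other in vectors if other != uid)
    return max(candidates, key=lambda other: cosine(vectors[uid], vectors[other]))


# Toy paragraph vectors keyed by made-up block UIDs.
vectors = {
    "para00001": [0.9, 0.1, 0.0],
    "para00002": [0.8, 0.2, 0.1],
    "para00003": [0.0, 0.1, 0.9],
}

for uid in vectors:
    # A lateral link written as a Roam block reference.
    print(uid, "->", "((" + most_similar(uid, vectors) + "))")
```

A brute-force search like this compares every pair, which is fine for a demo but would need an index or a library's built-in similarity search for a whole book.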

Note: If you are unfamiliar with Word2Vec, don't worry, I won't go into any detail in this post. Also, as you will soon learn, I didn't get that far with Roam (yet). If, however, you are curious and want to read a very understandable introduction to the subject, I recommend reading Understanding word vectors. If you want to know more, the web is full of very accessible tutorials about word vectors and natural language processing.

To demonstrate the type of outcome I am after, here's an example that I implemented in TheBrain a while back (https://bra.in/4vmxmZ). The text used in the example is Jane Eyre: An Autobiography by Charlotte Brontë. The paragraphs of the book are linked in a parent-child chain following the flow of the text. The thoughts on the sides are lateral thoughts (called jumps in TheBrain); these were generated automatically using a Doc2Vec document model.

Today I was playing with porting the Python script I developed for TheBrain to Roam. My goal is to import the Bible such that each chapter has its own dedicated page, plus a cover page with a table of contents. This means importing 11901 individual pages into Roam. I actually started with a more ambitious plan to load ten different translations, but as you will see, I ran into serious performance issues even when loading only one.

What are my solution options?

Roam offers three file formats for uploading information: Markdown, JSON, and EDN. With Markdown, Roam unfortunately only supports importing three pages at a time. Since I am trying to load potentially thousands of pages, doing it three at a time is clearly a non-starter. JSON does not have a similar limitation, or at least none is stated explicitly. EDN, the native data format of Clojure, is the newest addition to Roam's import/export formats.

Since I am not familiar with EDN, and my Python script for TheBrain was already built around JSON, I opted to attempt the task in JSON. Also, with JSON I had a head start thanks to David Bieber's excellent reference article: Roam Research's JSON Export Format.
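For orientation, a roam.json file is roughly a list of pages, each with a title and a tree of blocks under "children". The sketch below uses the keys that the helper functions in this post write (uid, string, children, heading); the UIDs are made up, and I leave out the create/edit timestamp and email fields the helpers add, without having checked whether Roam requires them. Treat it as an illustration of the shape, not a specification of the format.

```python
import json

# Sketch of the roam.json layout: pages at the top level, blocks nested
# under "children". UIDs are made up; timestamp/email fields omitted.
roam_export = [
    {
        "title": "Bibles",
        "children": [
            {"uid": "a1B2c3D4e", "string": "[[King James Bible]]", "children": []},
        ],
    },
    {
        "title": "King James Bible",
        "children": [
            {
                "uid": "f5G6h7J8k",
                "string": "Old Testament",
                "heading": 1,
                "children": [
                    {"uid": "m9N0p1Q2r", "string": "Genesis", "heading": 2, "children": []},
                ],
            },
        ],
    },
]

print(json.dumps(roam_export, indent=2))
```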

The solution

Workplan

- Download the Bible

- Create Roam helper functions

- Load the Bible

- Export to Roam.json

1. Downloading the Bible

After some googling, I decided to download the Text File database from http://biblehub.net. It contains ten translations, the tenth of which only has the New Testament. Downloading requires a free registration.

The text file has a header row containing the titles of the translations.

Each line in the file starts with a verse reference (e.g. Genesis 1:1), followed by the actual text in the various translations, delimited by TAB ('\t').

Translations included are:

- King James Bible

- American Standard Version

- Douay-Rheims Bible

- Darby Bible Translation

- English Revised Version

- Webster Bible Translation

- World English Bible

- Young's Literal Translation

- American King James Version

- Weymouth New Testament
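To illustrate the tab-delimited layout described above, the snippet below splits a header row and a data row. Both lines are hypothetical and abbreviated to two translation columns; the real file has one column per translation and, as the loading code later strips, a trailing line break on each line.

```python
# Hypothetical, abbreviated lines in the file's tab-delimited layout.
header = "Verse\tKing James Bible\tAmerican Standard Version\r\n"
row = ("Genesis 1:1\tIn the beginning God created the heaven and the earth."
       "\tIn the beginning God created the heavens and the earth.\r\n")

# Column 0 is the verse reference; the rest are translations in header order.
translations = [name.rstrip() for name in header.split("\t")[1:]]
reference, *texts = row.split("\t")
texts = [t.rstrip() for t in texts]  # drop the trailing \r\n on the last column

for name, text in zip(translations, texts):
    print(reference, "|", name, "|", text)
```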

2. Roam helper functions

I am declaring four functions:

getRoamUID()

Generates a 9-character UID containing upper- and lowercase letters and digits.

getRoamTime()

Returns the current time in milliseconds since the epoch.

add_page(page_title)

Creates a new page with the provided title and appends it to roam_json. Returns a reference to the created page.

add_child(parent, block_string, heading=None, text_align=None)

Creates a child block and appends it to the parent, which may be a page or another block. block_string is the text content of the block. heading (optional) accepts the values 1, 2 and 3. Finally, text_align (optional) accepts 'left', 'right', 'center' and 'justify'. The function returns a reference to the created child.

```python
# In Colab / Jupyter, install exrex first:
# !pip install exrex
import time

from exrex import getone

roam_json = []
email = 'foo@gmail.com'

# Helper functions

# Generate a 9-character UID of random characters: a-z, A-Z, 0-9
def getRoamUID():
    return getone('[a-zA-Z0-9]{9}')

# Current time in milliseconds since the epoch
def getRoamTime():
    return int(round(time.time() * 1000))

# Add a page object to roam_json
def add_page(page_title):
    new_page = {'title': page_title,
                'children': [],
                'create-time': getRoamTime(),
                'create-email': email,
                'edit-time': getRoamTime(),
                'edit-email': email}
    roam_json.append(new_page)
    return new_page

# Add a child object to a parent (parent may be a page or another child)
def add_child(parent, block_string, heading=None, text_align=None):
    child = {'uid': getRoamUID(),
             'string': block_string,
             'children': [],
             'create-time': getRoamTime(),
             'create-email': email,
             'edit-time': getRoamTime(),
             'edit-email': email}
    if heading is not None:
        child['heading'] = heading
    if text_align is not None:
        child['text-align'] = text_align
    parent['children'].append(child)
    return child
```
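If you would rather not install exrex, a UID with the same character set can be generated with the standard library alone. This is an alternative I'm suggesting, not what the script above uses; the name `get_roam_uid` is hypothetical, and `secrets` is chosen over `random` only as a conservative default.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits  # a-z, A-Z, 0-9


def get_roam_uid(length=9):
    """Random alphanumeric UID, same character set as the exrex pattern."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


print(get_roam_uid())
```

With 62 possible characters in 9 positions there are about 1.35 × 10^16 possible UIDs, so a collision among a few tens of thousands of blocks is very unlikely, though not impossible; keeping a set of issued UIDs would rule it out.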

3. Loading the Bible

Columns in the file are delimited with '\t'. The text includes some HTML markup, which I clean with a very rudimentary regular expression: '<.*?>'. Also, for simplicity, I assume that anything that is not valid UTF-8 can simply be ignored, hence the errors='ignore' argument to codecs.open.
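The two cleaning choices can be checked on toy strings. The `?` in '<.*?>' makes the match non-greedy, so each tag is removed separately; a greedy '<.*>' would swallow everything between the first '<' and the last '>' on the line. The example strings are made up for illustration, and neither pattern would cope with a '>' inside an attribute value.

```python
import re

html_cleanr = re.compile('<.*?>')  # non-greedy: stops at the first '>'
sample = 'In the <i>beginning</i> God created'

# Non-greedy: each tag is removed and the text between them is kept.
print(re.sub(html_cleanr, '', sample))

# Greedy: everything from the first '<' to the last '>' disappears.
print(re.sub('<.*>', '', sample))

# errors='ignore' silently drops bytes that are not valid UTF-8.
print(b'caf\xe9 ok'.decode('utf-8', errors='ignore'))
```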

```python
# Mount Google Drive (Colab only)
from google.colab import drive
drive.mount('/content/gdrive')

# Constants
root = '/content/gdrive/'
in_path = 'My Drive/Roam-playground/'
out_path = 'My Drive/Roam-playground/output/'
in_filename = 'bibles.txt'
out_filename = 'bibles.json'

# Load the source text
import codecs
import re

bible = []
html_cleanr = re.compile('<.*?>')  # removes <HTML> tags

with codecs.open(root + in_path + in_filename, 'r',
                 encoding='utf-8', errors='ignore') as source:
    for line in source:
        line = re.sub(html_cleanr, '', line)
        fields = line.split('\t')
        bible.append(fields)

# Print the header row
print(bible[0])
# Print the first verse of the King James translation
print(bible[1][1])
```

4. Processing the text and exporting to Roam.json

It looks a bit long, but it is really extremely simple (if not very neatly written). All I do is take the verse reference from column 0 and split it into book, chapter and verse number. I keep track of which book and chapter I am processing and use this information to build the table of contents as I go. The text I write includes the relevant [[Roam style references]] to support navigation once imported into Roam. At the end, I dump the data into a .json file.
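The trickiest part is splitting the verse reference, because some book names contain spaces (e.g. '1 Samuel'). The script's pattern (.+)\s(\d+):(\d+) handles this because .+ is greedy: it backtracks only as far as the last space before chapter:verse. A slightly more explicit variant of the same pattern, using re.fullmatch and named groups, could look like this; it is my suggestion, not the script's code, and unlike the script it converts chapter and verse to integers.

```python
import re

# Same pattern as verse_re in the script, with named groups for readability.
VERSE_RE = re.compile(r'(?P<book>.+)\s(?P<chapter>\d+):(?P<verse>\d+)')


def parse_reference(ref):
    """Split 'Book C:V' into (book, chapter, verse); raise if it doesn't match."""
    m = VERSE_RE.fullmatch(ref.strip())
    if m is None:
        raise ValueError('not a verse reference: %r' % ref)
    return m.group('book'), int(m.group('chapter')), int(m.group('verse'))


print(parse_reference('Genesis 1:1'))    # ('Genesis', 1, 1)
print(parse_reference('1 Samuel 3:10'))  # ('1 Samuel', 3, 10)

# For comparison: re.split with capturing groups returns the groups between
# empty strings, which is why the script reads v_ref[1], v_ref[2], v_ref[3].
print(re.split(r'(.+)\s(\d+):(\d+)', '1 Samuel 3:10'))  # ['', '1 Samuel', '3', '10', '']
```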

```python
import json
import os
import re

# Compile the regex used to split verse references such as 'Genesis 1:1'
# into book: 'Genesis', chapter: '1', and verse: '1'.
# Mind the gap: some book names consist of multiple words, e.g. '1 Samuel 1:1'
verse_re = re.compile(r'(.+)\s(\d+):(\d+)')

# Put the list of translations into a dedicated variable for readability
trans = bible[0][1:]            # bible translations; bible[0][0] == 'Verse'
t_range = range(0, len(trans))  # range over the translations

# Remove trailing \r\n from the translation names
for i in t_range:
    trans[i] = trans[i].rstrip()

# Create the output folder if it does not exist
os.makedirs(root + out_path, exist_ok=True)

# This is the TOC page listing the Bible translations
main_page = add_page('Bibles')

# Removed: iterating through all translations takes ages to load into Roam
# for t_num in t_range:
# Instead, process only one translation at a time.
t_num = 0  # King James; change for another translation

# Add the translation to the Bible TOC
add_child(main_page, '[[' + trans[t_num] + ']]')
# Create a page for the translation
trans_page = add_page(trans[t_num])

# Initialize prev_book and prev_chapter from row 1 (row 0 is the header)
v_ref = re.split(verse_re, bible[1][0])
book = prev_book = v_ref[1]
chapter = prev_chapter = v_ref[2]

# Build the TOC on the translation page: Testament / Book / Chapter
testament = add_child(trans_page, 'Old Testament', 1)
book_child = add_child(testament, book, 2)
add_child(book_child, '[[[[' + trans[t_num] + ']]/' + book + ' ' + chapter + ']]')
# Create the page for the first chapter
chapter_page = add_page('[[' + trans[t_num] + ']]/' + book + ' ' + chapter)

# Iterate over the Bible
for verse in bible[1:]:
    v_ref = re.split(verse_re, verse[0])  # the first column in the row
    book = v_ref[1]
    chapter = v_ref[2]
    verse_num = v_ref[3]
    v = verse[t_num + 1].rstrip()

    # When starting a new chapter or book, create a new page for the chapter
    if (book != prev_book) or (chapter != prev_chapter):
        # Add a link to the next chapter on the current chapter page
        add_child(chapter_page, '[[[[' + trans[t_num] + ']]/' + book + ' ' + chapter + ']]')
        # Maintain the TOC
        if book != prev_book:
            if book == 'Matthew':
                testament = add_child(trans_page, 'New Testament', 1)
            book_child = add_child(testament, book, 2)
        add_child(book_child, '[[[[' + trans[t_num] + ']]/' + book + ' ' + chapter + ']]')
        # Create the next chapter page
        chapter_page = add_page('[[' + trans[t_num] + ']]/' + book + ' ' + chapter)
        # Update the tracking variables
        prev_book = book
        prev_chapter = chapter

    # Add the verse if it is not empty. Some translations miss a couple of
    # verses or number them differently.
    if v != '':
        add_child(chapter_page, '[[' + book + ' ' + chapter + ':' + verse_num + ']] ' + v)

# Export
with open(root + out_path + out_filename, 'w') as f:
    json.dump(roam_json, f)
```
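The prev_book / prev_chapter bookkeeping amounts to grouping consecutive rows by (book, chapter). As an alternative way to express that step (a sketch, not the script above), itertools.groupby does the grouping directly, relying on the file listing verses in order. The rows are hypothetical and shortened, and the page titles follow the script's format, with verses written as "verse_num: text".

```python
import re
from itertools import groupby

VERSE_RE = re.compile(r'(.+)\s(\d+):(\d+)')

# Hypothetical, shortened rows: [reference, text] in file order.
rows = [
    ['Genesis 1:1', 'In the beginning ...'],
    ['Genesis 1:2', 'And the earth ...'],
    ['Genesis 2:1', 'Thus the heavens ...'],
    ['Exodus 1:1', 'Now these are ...'],
]


def book_and_chapter(row):
    """Grouping key: (book, chapter) parsed from the reference column."""
    book, chapter, _verse = VERSE_RE.fullmatch(row[0]).groups()
    return book, chapter


translation = 'King James Bible'
chapter_pages = {}
# groupby only merges *consecutive* rows with equal keys, which matches the
# file's verse order, so each group is exactly one chapter.
for (book, chapter), verses in groupby(rows, key=book_and_chapter):
    title = '[[' + translation + ']]/' + book + ' ' + chapter
    chapter_pages[title] = [VERSE_RE.fullmatch(r[0]).group(3) + ': ' + r[1]
                            for r in verses]

for title, blocks in chapter_pages.items():
    print(title, blocks)
```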

Experiences

The resulting .json file for a single Bible translation (KJB) is 102MB. The first time I ran the script, I was very enthusiastic and included all ten translations in the export; that file was almost 1GB.
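One small lever on file size (not something the script does, and untested on the Bible export) is the JSON formatting itself: json.dumps and json.dump write ', ' and ': ' separators by default, and passing separators=(',', ':') drops the spaces. This changes neither the content nor the number of pages, so it would not address the import time. A comparison on toy data shaped like roam_json:

```python
import json

# Toy data in the same shape as roam_json (one page with two blocks).
pages = [{'title': 'Genesis 1',
          'children': [{'uid': 'a1B2c3D4e', 'string': '1: In the beginning ...', 'children': []},
                       {'uid': 'f5G6h7J8k', 'string': '2: And the earth ...', 'children': []}]}]

default_text = json.dumps(pages)                         # ', ' and ': ' separators
compact_text = json.dumps(pages, separators=(',', ':'))  # no extra spaces

print(len(default_text), len(compact_text))
assert json.loads(compact_text) == pages  # same data, fewer bytes
```

Passing ensure_ascii=False (and opening the output file with encoding='utf-8') would additionally avoid \uXXXX escapes for non-ASCII characters.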

With only one translation, the import has 11901 pages to load.

An update since first posting this article:

Overnight, I realized that I was creating redundant references for each verse by adding a verse reference such as [[Genesis 1:1]] or [[Genesis 2:2]] in front of it (each verse is a block, so it already has an "invisible" block ID, as explained in the intro). Changing the following line in the code

```python
# add_child(chapter_page, '[[' + book + ' ' + chapter + ':' + verse_num + ']] ' + v)
add_child(chapter_page, verse_num + ': ' + v)
```

has reduced the file size for KJB tenfold, to 10.5MB, and the number of pages to load to 1191. The reason is that Roam creates a page for each verse reference.
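The effect can be checked before uploading: every distinct [[...]] target in a block string is a page Roam creates or links to, on top of the pages listed in the file. The rough counter below only looks at simple, non-nested references, so for a nested link like [[[[King James Bible]]/Genesis 1]] it picks up only the inner title. The function name and toy data are hypothetical.

```python
import re

# Innermost [[...]] references only: no brackets allowed inside the title.
PAGE_REF_RE = re.compile(r'\[\[([^\[\]]+)\]\]')


def referenced_titles(pages):
    """Collect distinct simple [[Title]] targets from all block strings."""
    titles = set()
    stack = [child for page in pages for child in page['children']]
    while stack:
        block = stack.pop()
        titles.update(PAGE_REF_RE.findall(block['string']))
        stack.extend(block['children'])
    return titles


before = [{'title': 'Genesis 1', 'children': [
    {'string': '[[Genesis 1:1]] In the beginning ...', 'children': []},
    {'string': '[[Genesis 1:2]] And the earth ...', 'children': []}]}]
after = [{'title': 'Genesis 1', 'children': [
    {'string': '1: In the beginning ...', 'children': []},
    {'string': '2: And the earth ...', 'children': []}]}]

print(len(referenced_titles(before)), len(referenced_titles(after)))  # 2 0
```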

Also, in a conversation with Conor White-Sullivan (co-founder of Roam Research), I learned that upload speeds are deliberately throttled and that, for the time being, uploading a large corpus is discouraged.

After about an hour of processing, Roam stops with an error.

Limiting the upload to 20 pages shows that the roam.json file itself is fine. This is what the end result looks like, although it only contains the skeleton of the Bible and the first 18 verses.
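One way to do such a trial run is to write only the first few pages of roam_json into a separate file. The function name and file name below are hypothetical. Links from the included pages to chapters outside the sample will point to pages that have no content yet.

```python
import json
import os
import tempfile


def write_sample(pages, path, n=20):
    """Write only the first n pages to path, for a small trial import."""
    sample = pages[:n]
    with open(path, 'w', encoding='utf-8') as f:
        json.dump(sample, f)
    return len(sample)


# Usage with toy pages; in the script this would be roam_json and a path
# such as root + out_path + 'sample.json'.
toy_pages = [{'title': 'Page %d' % i, 'children': []} for i in range(50)]
path = os.path.join(tempfile.gettempdir(), 'roam_sample.json')
print(write_sample(toy_pages, path))  # 20
```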

Conclusion

The .json import is not built to handle large volumes of data. I will research the EDN format and make a second attempt. If you want to play with the script, make sure you do it in an empty database. I created a local database for this purpose, which I ended up having to delete multiple times.

Should you be interested in collaborating on making this work, contact me in the comments or on Twitter.
